Sandbox Success, Production Failure: Building Test Harnesses That Bridge the Carrier API Reliability Gap

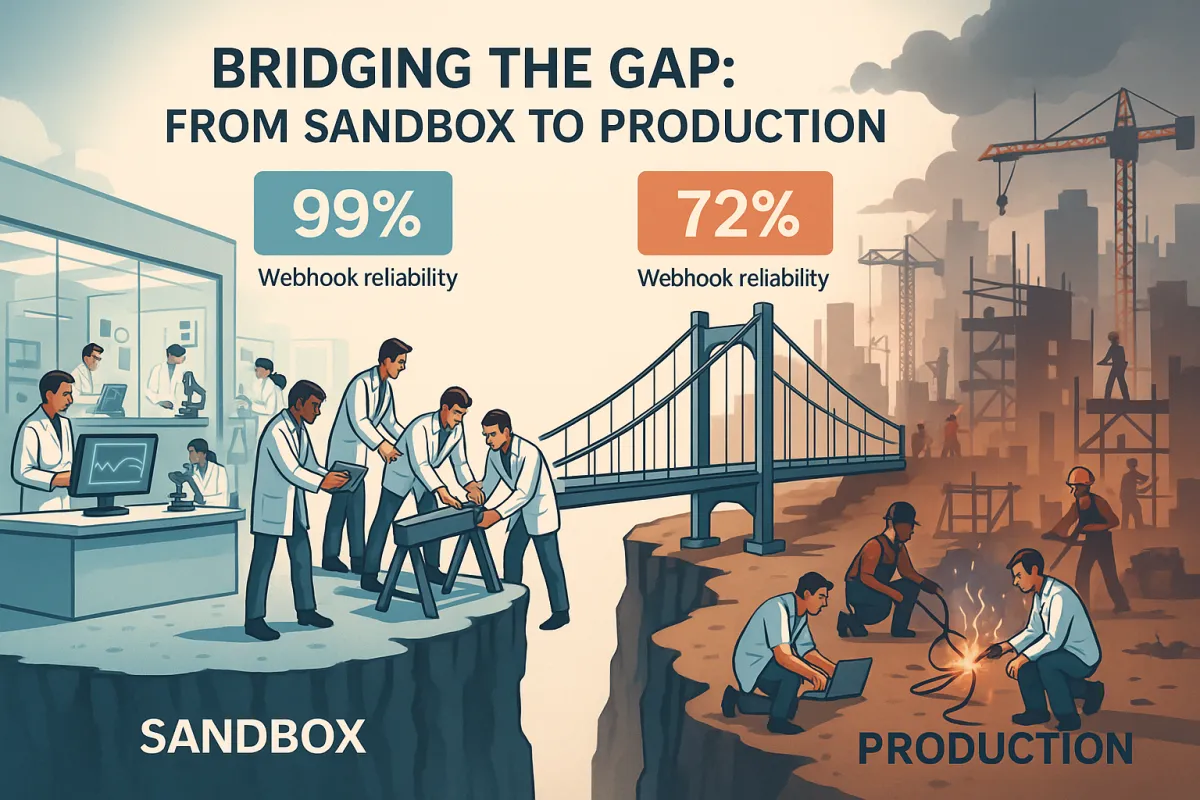

Your webhook endpoints pass every sandbox test. Your rate requests return perfect responses. Your authentication flow works flawlessly. Then you deploy to production and 72% of implementations face reliability issues within their first month. Sound familiar?

The carrier API testing reliability gap isn't just about different environments—it's about fundamentally different challenges that sandbox testing simply cannot replicate. Average weekly API downtime rose from 34 minutes in Q1 2024 to 55 minutes in Q1 2025, yet most integration teams still rely on sandbox environments that achieve 99%+ webhook reliability because they lack production complexity.

The 72% Problem: When Sandboxes Lie

Recent status pages tell the real story. ShipEngine's status page shows "investigating reports of the following errors being returned at a high rate when attempting to get rates and create labels: 'carrier_id xxxxxxx not found' 'warehouse_id yyyyyyy not found'" while Shippo reports "experiencing difficulties in receiving USPS Tracking updates" with tracking updates being delayed until "the carrier's API is restored". Both platforms acknowledge webhook delivery issues as routine occurrences.

The disconnect runs deeper than individual carrier problems. A 2025 Webhook Reliability Report shows that "nearly 20% of webhook event deliveries fail silently during peak loads", while transient network issues cause another layer of complexity. ThousandEyes reports that "over 30% of API delivery failures trace back to transient connectivity faults rather than server-side issues". These network hiccups are invisible in controlled sandbox environments but common in production multi-datacenter deployments.

Authentication handling creates another blindspot. USPS webhooks survived authentication token renewal seamlessly, while European carriers like PostNord required webhook re-registration after credential updates. DHL Express fell somewhere between - webhooks continued working but with degraded reliability for 4-6 hours post-renewal.

Why Standard Testing Frameworks Miss Carrier-Specific Failures

Generic API monitoring treats all endpoints the same—a fundamental mistake when dealing with carrier integrations. The rates that you get in the sandbox may not match the rates that you get in production. Any negotiated rate discounts that you have are not applied in the sandbox and some rates are "dummy" rates to prevent abuse of our sandbox for production purposes.

Even worse, webhooks and workflows that require webhooks (such as batching) are not available in the sandbox environment. This creates a false sense of confidence when moving to production where webhook reliability becomes business-critical.

The financial impact compounds quickly. Integration bugs discovered in production cost organizations an average of $8.2 million annually. Contract testing catches these issues early, reducing debugging time by up to 70% and preventing costly downstream failures.

Building Production-Grade Test Harnesses

Effective carrier API testing starts with understanding that different carriers behave differently across environments. Your test harness needs to account for these variations systematically.

Start with environment parity testing. Start with API inventory across all environments. Your carrier integrations span sandbox, staging, and production. Each environment connects to different carrier endpoints, authentication systems, and webhook targets. Map all of it.

Authentication token renewal testing becomes crucial when you realize that different carriers handle this process differently. Build automated tests that simulate token renewal during active webhook subscriptions. Monitor webhook delivery success rates before, during, and after credential updates.

Network failure injection separates robust integrations from fragile ones. A dependable webhook API includes thoughtful design decisions that account for network latency, failures, retries, security, and observability. Whether you're sending or receiving webhooks, the best practices listed below will help you design powerful, production-grade webhook systems.

Webhook Reliability Benchmarking

Your webhook endpoints need speed above all else. Webhook endpoints should emphasize speed. When an event is received, your server should provide a successful HTTP status code (like 200 OK) within a few seconds. This prompt acknowledgement avoids unwanted retries from the sender.

Test different failure scenarios systematically. Goal: Test how your application handles various HTTP error codes and failure conditions. Set up your mock API to return different error responses based on request parameters. This tests your retry logic and error handling mechanisms.

Multi-Environment Deployment Strategy

Production-grade testing requires deploying webhook endpoints across multiple environments while maintaining consistent monitoring. European platforms like nShift and Cargoson handled webhook storms better, likely due to their regional focus and deeper carrier relationships. Cargoson's webhook implementation showed the smallest sandbox-to-production reliability gap in our testing, particularly for DHL and DPD integrations.

Consider multiple webhook endpoints per carrier for critical integrations. Consider multiple webhook endpoints per platform for critical integrations. Platforms supporting multiple webhook URLs allow A/B testing of endpoint reliability and provide automatic failover when primary endpoints fail. The operational complexity is worthwhile for high-volume integrations where webhook failures directly impact revenue.

Continuous Discovery and Monitoring

Effective carrier API monitoring goes beyond simple uptime checks. Standard monitoring tools miss the critical patterns unique to carrier APIs. While Datadog might catch your server metrics and New Relic monitors your application performance, neither understands why UPS suddenly started returning 500 errors for rate requests during peak shipping season, or why FedEx's API latency spiked precisely when your Black Friday labels needed processing.

Monitor carrier-specific failure patterns. Carrier APIs also have unique failure modes. Rate shopping might work perfectly while label creation fails silently. Tracking updates could be delayed by hours without any HTTP error status. Your generic monitoring won't catch these carrier-specific problems until they've already impacted shipments.

Measuring Success Beyond Green Tests

The most sophisticated retry strategies adapt to real-world patterns. Companies like Slack publicly discussed switching "from fixed-interval retries to adaptive algorithms, which lowered lost event rates by 30%".

Build monitoring that understands normal operational patterns. Implement behavioral monitoring that understands normal patterns. Train models on successful shipping flows, typical rate request volumes, and expected tracking update frequencies. Detect anomalies that suggest compromise or abuse.

Platform Considerations and Vendor Reality

When choosing between direct carrier APIs and integration platforms, factor in the testing overhead. Platforms like Cargoson, nShift, EasyPost, and ShipEngine each abstract different levels of carrier complexity, but they also introduce their own reliability challenges.

EasyPost performed more consistently, though still showed 15% higher failure rates in production compared to sandbox. Their European carrier connections proved particularly unreliable during business hours (9 AM - 5 PM CET).

The monitoring complexity increases with each abstraction layer. Legacy systems often provide basic uptime monitoring with limited rate limit insights. Newer platforms like nShift and Transporeon include carrier-specific monitoring dashboards. Cargoson provides real-time visibility into rate limit consumption across all carrier integrations, with predictive alerting when approaching limits.

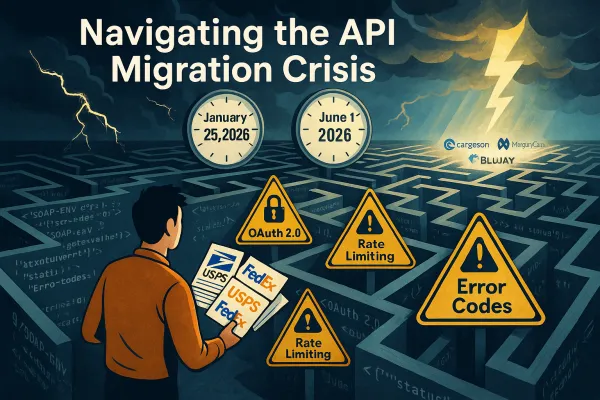

Future-Proofing Your Test Infrastructure

Rate limiting behavior varies significantly between carriers and affects testing strategies. Your multi-carrier TMS environment amplifies these risks because rate limits from FedEx, UPS, DHL, and your regional partners don't just add up—they interact unpredictably during peak demand.

Build failover logic with business context understanding. The key is building failover logic that understands business context, not just technical failure. If your primary carrier for Germany-to-Poland shipments hits rate limits during peak season, the system should automatically route requests to your secondary carrier for that lane while preserving service level requirements.

Start by auditing your current exposure across all carrier integrations. Start by auditing your current rate limit exposure across all carrier integrations. Document each carrier's specific limits, peak usage patterns, and historical failure points. Then implement monitoring before optimization—you need visibility into current performance before building smarter controls. Finally, test your failover logic during low-impact periods rather than discovering gaps during peak shipping season.

The 72% production failure rate isn't inevitable—it's the result of testing approaches that don't match production realities. Build test harnesses that understand carrier-specific behaviors, monitor business-critical patterns, and validate performance under real-world conditions. Your integration reliability depends on bridging that sandbox-to-production gap systematically, not hoping your next deployment will be different.