Production-Grade Carrier API Monitoring: Building Systems That Catch Authentication Cascades and Compliance Changes Before They Break Shipments

European shippers learned October 2025's lesson the hard way. While your application monitoring tools registered healthy systems and normal CPU usage, customers couldn't check out. 72% of implementations face reliability issues within their first month of production deployment, and between Q1 2024 and Q1 2025, average API uptime fell from 99.66% to 99.46%, resulting in 60% more downtime year-over-year. In Q1 2024, APIs saw around 34 minutes of weekly downtime. In Q1 2025, that rose to 55 minutes.

The difference between successful carrier API monitoring and generic application monitoring comes down to understanding shipping domain failures. Carrier APIs have failure modes of their own. Rate shopping might work perfectly while label creation fails silently. Tracking updates could be delayed by hours without any HTTP error status. Generic monitoring won't catch these carrier-specific problems until they have already affected shipments.

Why Generic API Monitoring Fails for Carrier Integrations

Standard monitoring tools miss the critical patterns unique to carrier APIs. While Datadog might catch your server metrics and New Relic monitors your application performance, neither understands why UPS suddenly started returning 500 errors for rate requests during peak shipping season, or why FedEx's API latency spiked precisely when your Black Friday labels needed processing.

Generic monitoring treats all APIs the same. That assumption breaks quickly with carriers. A 2-second response time during checkout rate shopping creates cart abandonment. The same 2-second delay for tracking updates on delivered packages doesn't register as a customer issue. Your traditional monitoring tools can't distinguish between these scenarios.

Platform approaches are worth considering. Integration platforms like Cargoson build monitoring into their carrier abstraction layer, which means you get carrier-specific health metrics without building custom monitoring for each API. Compare this against managing individual carrier monitoring yourself on top of platforms like ShipEngine or EasyPost. Cargoson, nShift, and EasyPost each handle carrier monitoring differently, and those differences affect your operational visibility.

Authentication Cascade Detection: The October 2025 Wake-Up Call

The most insidious failure pattern involved token refresh logic breaking down under load. When La Poste's API started returning 401 errors for previously valid tokens, most monitoring systems classified this as a temporary authentication issue. But the real problem was more complex.

Their OAuth implementation couldn't handle concurrent refresh requests from the same client. If your system made simultaneous calls during token expiry, you'd get a mix of successful authentications and failures. Your monitoring would show 85% success rates while your actual order processing ground to a halt.

Here's what effective authentication monitoring detects: sudden spikes in 401 responses from previously authenticated sessions, increased latency specifically on token refresh endpoints, and patterns where subsequent API calls fail after successful authentication. Most teams discover these issues when customers start complaining about failed checkouts.
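The first of those signals, a spike in 401 responses on sessions that had already authenticated, can be sketched as a sliding-window check. This is a minimal illustration rather than a production detector: the class name, the 60-second window, the 5% threshold, and the minimum sample count are all assumptions chosen for the example.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AuthCascadeDetector:
    """Flags a burst of 401s on sessions that had already authenticated."""
    window_seconds: float = 60.0   # assumed look-back window
    max_401_ratio: float = 0.05    # assumed alert threshold
    min_samples: int = 20          # avoid alerting on tiny samples
    _events: deque = field(default_factory=deque)  # (timestamp, was_401)

    def record(self, timestamp: float, status: int, had_valid_token: bool) -> bool:
        """Record one response; return True when the alert should fire."""
        # A 401 on a request that never held a valid token is an ordinary
        # login failure, not a cascade signal, so it is ignored here.
        if not had_valid_token:
            return False
        self._events.append((timestamp, status == 401))
        # Drop events that have fallen out of the sliding window.
        while self._events and self._events[0][0] < timestamp - self.window_seconds:
            self._events.popleft()
        total = len(self._events)
        if total < self.min_samples:
            return False
        failures = sum(1 for _, is_401 in self._events if is_401)
        return failures / total > self.max_401_ratio


# Roughly one request in seven fails: an ~85% success rate that a generic
# uptime dashboard might tolerate, but that this check treats as an incident.
detector = AuthCascadeDetector()
fired = [detector.record(float(t), 401 if t % 7 == 0 else 200, True) for t in range(40)]
print(any(fired))
```

The same structure could track latency on the token refresh endpoint by recording durations instead of status codes.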

Business-Critical vs Background Monitoring: Setting the Right SLAs

Your monitoring thresholds should reflect actual business requirements, not arbitrary technical benchmarks. A failed rate quote during checkout matters more than a tracking update that arrives 500ms late for an order that has already shipped. Monitoring should show where each API performs as expected against the SLA you negotiated with that carrier, not against a generic uptime metric.

Set monthly error budgets for each carrier based on your business requirements. A high-volume shipper might need 99.95% successful rate shopping, while occasional shippers can accept 99.5%. Track burn rate, not just absolute errors. If your monthly error budget allows 100 failed requests, but 50 failures happen in the first week, you're burning budget too quickly. Alert on these trends before you exhaust your error budget and breach customer SLAs.
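The burn-rate idea can be made concrete with a little arithmetic. The sketch below assumes a 30-day budget period and a constant failure rate for the projection; the function names are illustrative only.

```python
def burn_rate(failures_so_far: int, monthly_budget: int,
              days_elapsed: float, days_in_period: float = 30.0) -> float:
    """Budget consumed relative to time elapsed; 1.0 means exactly on pace."""
    if monthly_budget <= 0 or days_elapsed <= 0:
        raise ValueError("budget and elapsed time must be positive")
    budget_fraction_used = failures_so_far / monthly_budget
    period_fraction_elapsed = days_elapsed / days_in_period
    return budget_fraction_used / period_fraction_elapsed


def days_until_exhausted(failures_so_far: int, monthly_budget: int,
                         days_elapsed: float) -> float:
    """Days remaining before the budget runs out if the current rate holds."""
    if failures_so_far == 0:
        return float("inf")
    failures_per_day = failures_so_far / days_elapsed
    return (monthly_budget - failures_so_far) / failures_per_day


# The example from the text: a budget of 100 failures, 50 used after one week.
print(round(burn_rate(50, 100, 7), 2))
print(days_until_exhausted(50, 100, 7))
```

For the example above the burn rate comes out at about 2.14, meaning the budget is being spent more than twice as fast as the month allows, and at that pace it would be gone after another 7 days, around day 14. Alerting on a burn rate above some threshold (the threshold itself is a business decision) catches the trend well before the budget is exhausted.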

Consider the operational costs as well. They extend beyond immediate cart abandonment. Manual rate shopping during outages ties up customer service teams. Delayed label creation pushes shipments to the next business day. Failed tracking updates generate support calls from worried customers. Each minute of carrier API downtime creates a cascade of operational overhead that multiplies across your entire shipping operation.

Monitoring Architecture for Multi-Tenant Platforms

Most carrier integration platforms serve multiple shippers. Your monitoring architecture must isolate performance data and alerting per tenant while efficiently sharing carrier connections.

Design tenant-specific dashboards that show only relevant carrier performance. A tenant shipping exclusively within the EU doesn't need alerts about USPS domestic service issues. Use tenant-specific error budgets - a high-volume shipper might have tighter SLAs than occasional users. This segmentation helps platforms like Cargoson, nShift, and EasyPost manage diverse customer expectations without alert noise.
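One way to picture this segmentation is a routing step that decides which tenants should hear about a given carrier alert. The data model below (TenantProfile, CarrierAlert, tenants_to_notify) is hypothetical and only illustrates the filtering logic; the tenant names and targets are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantProfile:
    tenant_id: str
    carriers: frozenset          # carriers this tenant actually ships with
    target_success_rate: float   # tenant-specific SLO, e.g. 0.9995


@dataclass(frozen=True)
class CarrierAlert:
    carrier: str
    success_rate: float          # observed over the alerting window


def tenants_to_notify(alert: CarrierAlert, tenants: list) -> list:
    """IDs of tenants that use the carrier and whose own target is breached."""
    return [
        t.tenant_id
        for t in tenants
        if alert.carrier in t.carriers and alert.success_rate < t.target_success_rate
    ]


tenants = [
    TenantProfile("eu-high-volume", frozenset({"DHL", "DPD"}), 0.9995),
    TenantProfile("us-occasional", frozenset({"USPS", "UPS"}), 0.995),
]
# A USPS degradation never reaches the EU-only tenant.
print(tenants_to_notify(CarrierAlert("USPS", 0.990), tenants))
```

Keeping the SLO on the tenant rather than on the carrier means the same carrier event can page one customer's operations team and stay silent for another.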

Regulatory Compliance Monitoring: The European Challenge

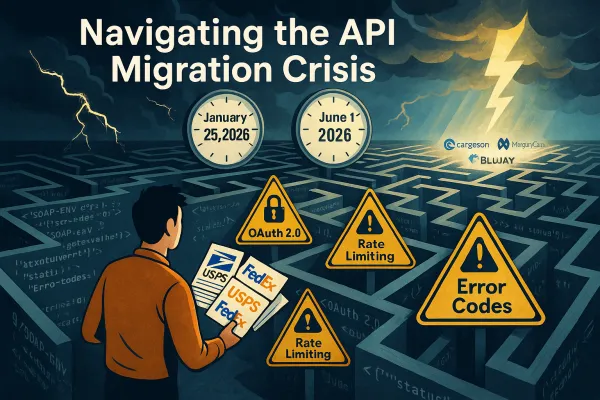

European carriers experienced regulatory compliance issues that created API behavior changes without proper deprecation warnings. To comply with new customs regulations, carriers, including USPS and others, are now requiring six-digit Harmonized System (HS) codes on all international commercial shipments. Effective September 1, 2025, shipments without these codes may be delayed or rejected by customs authorities.

The ICS2 compliance changes created API payload requirements that weren't announced through traditional deprecation cycles. Carriers simply started rejecting requests that worked perfectly the day before. Your monitoring needed to detect these rejection patterns and correlate them with regulatory announcements.
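Detecting this kind of overnight change can be as simple as comparing rejection counts between two time windows. The sketch below is an assumption-laden illustration: the error code strings are invented for the example and do not come from any carrier's documentation, and the thresholds are arbitrary.

```python
from collections import Counter


def new_rejection_patterns(baseline: Counter, current: Counter,
                           min_count: int = 10, surge_factor: float = 5.0) -> list:
    """Compare rejection counts keyed by (carrier, error_code) across two windows.

    Returns the keys that never appeared in the baseline window or that grew
    by more than surge_factor, ignoring patterns seen fewer than min_count times.
    """
    flagged = []
    for key, count in current.items():
        if count < min_count:
            continue
        previous = baseline.get(key, 0)
        if previous == 0 or count / previous > surge_factor:
            flagged.append(key)
    return sorted(flagged)


# Hypothetical counts: yesterday's normal noise versus today's traffic.
yesterday = Counter({("USPS", "INVALID_ADDRESS"): 12})
today = Counter({("USPS", "INVALID_ADDRESS"): 14, ("USPS", "MISSING_HS_CODE"): 230})
print(new_rejection_patterns(yesterday, today))
```

A rejection reason that did not exist the day before is a strong hint that the carrier changed its validation rules, which is the moment to check recent regulatory announcements.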

Platforms like Cargoson, nShift, and Descartes build compliance monitoring into their carrier integration layers, but if you're managing direct carrier connections, you need to track these changes manually. Create alerting for service restrictions that affect your shipping regions. When PostNL announces service suspensions to specific postal codes, your system should automatically adjust carrier selection for affected shipments.
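The automatic adjustment described here can be sketched as a filter applied before carrier selection. The suspension table and its postal-code prefixes are hypothetical; in practice they would have to be maintained from carrier service announcements.

```python
def eligible_carriers(candidates: list, destination_postal_code: str,
                      suspensions: dict) -> list:
    """Drop carriers with an active suspension covering the destination.

    suspensions maps a carrier name to an iterable of postal-code prefixes
    that the carrier has announced it is not currently serving.
    """
    result = []
    for carrier in candidates:
        prefixes = suspensions.get(carrier, ())
        if any(destination_postal_code.startswith(p) for p in prefixes):
            continue
        result.append(carrier)
    return result


# Hypothetical suspension data, not a real PostNL announcement.
suspensions = {"PostNL": ["97", "98"]}
print(eligible_carriers(["PostNL", "DHL"], "9712 AB", suspensions))
print(eligible_carriers(["PostNL", "DHL"], "1012 JS", suspensions))
```

Prefix matching is a simplification; real suspensions may be expressed as ranges or named regions, so the matching rule is an assumption to adapt.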

Implementation Patterns That Actually Work in Production

An architecture that holds up in production separates concerns by function (rate shopping, labelling, tracking) rather than by carrier. Each monitor understands the specific response patterns and failure modes for its function across all carriers. A central component, called the Carrier Health Engine here, maintains baseline performance profiles for each carrier and can detect when UPS response times suddenly jump or when DHL starts returning malformed XML.
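A baseline performance profile of the kind the Carrier Health Engine maintains could look like the following sketch. It compares the median of the most recent samples against the median of a longer rolling window; the window sizes, the 2x jump factor, and the class name are assumptions for illustration.

```python
from collections import deque
from statistics import median


class LatencyBaseline:
    """Rolling latency profile for one (carrier, function) pair."""

    def __init__(self, baseline_size: int = 200, recent_size: int = 20,
                 jump_factor: float = 2.0):
        self.baseline = deque(maxlen=baseline_size)  # long-term profile
        self.recent = deque(maxlen=recent_size)      # last few samples
        self.jump_factor = jump_factor

    def observe(self, latency_ms: float) -> bool:
        """Add a sample; return True if recent latency has jumped above baseline."""
        self.recent.append(latency_ms)
        jumped = (
            len(self.baseline) == self.baseline.maxlen
            and len(self.recent) == self.recent.maxlen
            and median(self.recent) > self.jump_factor * median(self.baseline)
        )
        # Only fold samples into the baseline while healthy, so an ongoing
        # incident does not quietly become the new normal.
        if not jumped:
            self.baseline.append(latency_ms)
        return jumped


profile = LatencyBaseline(baseline_size=50, recent_size=5)
for _ in range(50):
    profile.observe(300.0)                          # warm up at ~300 ms
print([profile.observe(900.0) for _ in range(4)])   # latency triples
```

Using medians rather than means keeps a single slow request from triggering the alert; it takes a majority of the recent window to move the median.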

Build carrier-specific performance profiles. Design weighted health scores that reflect your actual usage patterns. If 80% of your volume goes through UPS Ground service, weight UPS Ground performance heavily in your overall health score. A five-minute outage in UPS Next Day Air might barely register, while the same outage in UPS Ground creates immediate business impact.
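A weighted health score of this kind is a volume-weighted average. In the sketch below, the 80% share for UPS Ground comes from the example above, while the remaining shares and the service labels are made up to complete it.

```python
def weighted_health_score(volume_share: dict, success_rate: dict) -> float:
    """Volume-weighted average of per-service success rates (0.0 to 1.0)."""
    total_share = sum(volume_share.values())
    if total_share <= 0:
        raise ValueError("volume shares must sum to a positive number")
    weighted = sum(share * success_rate[service]
                   for service, share in volume_share.items())
    return weighted / total_share


# Assumed shipping mix: only the 80% Ground share is taken from the text.
volume = {"UPS Ground": 0.80, "UPS Next Day Air": 0.05, "Other": 0.15}

next_day_air_down = {"UPS Ground": 1.0, "UPS Next Day Air": 0.0, "Other": 1.0}
ground_down = {"UPS Ground": 0.0, "UPS Next Day Air": 1.0, "Other": 1.0}

print(round(weighted_health_score(volume, next_day_air_down), 2))
print(round(weighted_health_score(volume, ground_down), 2))
```

With this mix, a full Next Day Air outage leaves the score at about 0.95, while the same outage on Ground drops it to about 0.20, which matches the difference in business impact.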

Consider regional differences in your scoring. A carrier might perform excellently for domestic shipments while struggling with international rates. Your health scoring should reflect these nuances - Manhattan Active, Blue Yonder, and Cargoson all implement regional performance tracking for this reason.

Move beyond simple uptime percentages to business-relevant SLOs. Instead of "UPS API is 99.9% available," track "Rate shopping completes successfully within 2 seconds for 95% of requests." This SLO directly relates to customer experience during checkout.
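The SLO quoted above translates directly into a calculation over request samples. This is a minimal sketch; the function names are illustrative, and treating an empty window as compliant is an assumption you may want to reverse.

```python
def slo_compliance(samples, threshold_seconds: float = 2.0) -> float:
    """Fraction of requests that succeeded within the latency threshold.

    samples is an iterable of (succeeded, duration_seconds) pairs.
    """
    samples = list(samples)
    if not samples:
        return 1.0  # no traffic: treated as compliant (an assumption)
    good = sum(1 for ok, duration in samples
               if ok and duration <= threshold_seconds)
    return good / len(samples)


def meets_slo(samples, target: float = 0.95, threshold_seconds: float = 2.0) -> bool:
    """True when the share of fast, successful requests reaches the target."""
    return slo_compliance(samples, threshold_seconds) >= target


# 96 fast successes, 2 slow successes, 2 fast failures.
window = [(True, 0.5)] * 96 + [(True, 3.0)] * 2 + [(False, 0.3)] * 2
print(slo_compliance(window), meets_slo(window))
```

Note that a slow success and a fast failure both count against the SLO. That is the point of the business-relevant framing: the customer at checkout experiences either one as a broken rate quote.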

Building Incident Response Procedures for Carrier Failures

Document your incident response procedures with specific carrier failure scenarios. When La Poste's authentication fails, your team should know whether to implement immediate carrier failover or wait for the auth system to recover. These decisions require carrier-specific knowledge that most monitoring tools don't provide.

Create carrier-specific runbooks. If DHL Express API failures spike on Monday mornings, investigate their system maintenance schedules. We tracked this pattern and found that predictable maintenance windows create cascading failures when adaptive algorithms don't account for carrier-specific downtime patterns.

Circuit breaker patterns become essential in multi-carrier environments: they prevent cascading failures and improve system resilience under load. However, standard circuit breaker implementations need modification for carrier mixing. You need business logic that understands which carriers can substitute for specific shipping lanes.
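A lane-aware variant can be sketched by pairing a small circuit breaker with a per-lane preference list. Everything here is an illustrative assumption: the breaker is deliberately simplified (consecutive-failure counting, a single cooldown), and the lane keys and carrier preferences are invented.

```python
import time


class CircuitBreaker:
    """Minimal breaker: opens after consecutive failures, retries after a cooldown."""

    def __init__(self, failure_threshold=5, cooldown_seconds=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock                 # injectable so tests can control time
        self.consecutive_failures = 0
        self.opened_at = None              # None means the circuit is closed

    def allow_request(self) -> bool:
        if self.opened_at is None:
            return True
        # Half-open: let trial traffic through once the cooldown has passed.
        return self.clock() - self.opened_at >= self.cooldown_seconds

    def record(self, success: bool) -> None:
        if success:
            self.consecutive_failures = 0
            self.opened_at = None
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.opened_at = self.clock()


def pick_carrier(lane, preferences, breakers):
    """Return the first carrier for this lane whose breaker allows traffic."""
    for carrier in preferences.get(lane, ()):
        if breakers[carrier].allow_request():
            return carrier
    return None  # no substitute serves this lane; escalate instead of guessing


# Hypothetical lanes: DPD can replace DHL within the EU but not to the US.
preferences = {("NL", "DE"): ["DHL", "DPD"], ("NL", "US"): ["DHL"]}
breakers = {"DHL": CircuitBreaker(failure_threshold=2), "DPD": CircuitBreaker()}
breakers["DHL"].record(False)
breakers["DHL"].record(False)              # DHL's circuit is now open
print(pick_carrier(("NL", "DE"), preferences, breakers))
print(pick_carrier(("NL", "US"), preferences, breakers))
```

The important part is the `None` result: when no carrier on the lane's list is available, the system should surface that fact rather than fail over to a carrier that cannot actually serve the route.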

Tools and Platforms: What Works for Carrier Integration Teams

Platform comparison reveals interesting patterns. EasyPost handles burst traffic well but struggles with sustained high volume. nShift provides excellent visibility but can be slow to adapt. ShipEngine offers good balance but limited carrier coverage. Cargoson provides real-time visibility into rate limit consumption across all carrier integrations, with predictive alerting when approaching limits.

Vendor-agnostic monitoring becomes crucial when managing platforms like EasyPost, nShift, and Cargoson simultaneously. Our testing showed that platform-specific monitoring tools create blind spots when problems span multiple integrations. You need monitoring that can correlate failures across different platforms and direct carrier connections.

European platforms like nShift and Cargoson handled webhook storms better, likely due to their regional focus and deeper carrier relationships. Cargoson's webhook implementation showed the smallest sandbox-to-production reliability gap in our testing, particularly for DHL and DPD integrations.

For European shippers, platforms with strong regional carrier relationships (nShift, Cargoson) showed measurably better webhook reliability than global platforms adapted for European markets. The difference was most pronounced for DHL, DPD, and GLS integrations. Global platforms like EasyPost and ShipEngine excel at USPS and FedEx webhook reliability but struggle with European carrier complexity.

Your carrier API monitoring setup decides whether you catch integration failures before customers do. Focus on business outcomes, build carrier-specific intelligence, and choose tools that understand shipping domain logic. When carrier APIs fail, you'll have the visibility and automation needed to maintain service while others scramble to restore functionality.