High-Volume Shipping Reality Check: Why 95% of Carrier APIs Fail the Conveyor Belt Test

When your warehouse conveyor belt processes 20-30 packages per minute, non-stop, every millisecond counts. Even a single 500ms API call can back up flow, reroute packages to manual processing (the dreaded "jackpot lane"), and cause SLA failures or overtime costs. The harsh reality? Most carrier integration APIs simply weren't built for this pace.
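To see why, translate throughput into a per-package time window. Here is a minimal sketch in Python. The helper names are our own, and it assumes exactly one blocking API call sits on the critical path for each package:

```python
def seconds_per_package(packages_per_minute: float) -> float:
    """Time window available for each package at a steady conveyor rate."""
    return 60.0 / packages_per_minute


def latency_share(api_latency_s: float, packages_per_minute: float) -> float:
    """Fraction of each package's window consumed by one blocking API call."""
    return api_latency_s / seconds_per_package(packages_per_minute)


for ppm in (20, 30):
    window = seconds_per_package(ppm)
    share = latency_share(0.5, ppm)
    print(f"{ppm} pkg/min: {window:.1f}s per package; a 500ms call uses {share:.0%}")
```

At 30 packages per minute each package gets a 2-second window, so a single 500ms call eats a quarter of it before anything is scanned or printed.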

The 500ms Death Sentence for Warehouse Automation

Walk into any modern distribution center and you'll see the unforgiving truth: automation doesn't wait. A typical conveyor-based shipping line allows under 2 seconds total to scan, rate shop, generate a label, and print it. In these environments, API latency becomes the bottleneck.
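That 2-second window is a budget shared by every stage. A minimal sketch of the arithmetic follows. The per-stage timings are hypothetical placeholders, not measurements from our tests; substitute your own:

```python
# Hypothetical per-stage latencies in seconds (placeholders, not benchmarks).
STAGES = {
    "scan": 0.15,
    "rate_shop": 0.50,  # blocking carrier API call
    "label": 0.40,      # blocking carrier API call
    "print": 0.60,
}

BUDGET_S = 2.0  # "under 2 seconds total" per package


def headroom(stages: dict[str, float], budget: float = BUDGET_S) -> float:
    """Seconds left in the budget after all stages run back to back."""
    return budget - sum(stages.values())


print(f"Headroom: {headroom(STAGES):.2f}s")

# Same line, but the rate-shop call slows from 500ms to 800ms.
slow = {**STAGES, "rate_shop": 0.80}
print(f"Headroom with slow rate shop: {headroom(slow):.2f}s")
```

Because the stages run sequentially, a 300ms slowdown in one API call comes straight out of the remaining headroom; there is no other stage to absorb it.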

The numbers get uglier when you dig deeper. Consider a performance test that validated up to 500 WMS users all working in a warehouse at the same time. That measures users, not API calls per second. A single conveyor line processing packages continuously generates far more API requests than 500 users performing occasional actions.
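Back-of-the-envelope arithmetic shows the sustained load. This sketch assumes, hypothetically, that each package triggers one rate request per carrier plus one label request; the carrier count is an illustrative assumption, not a figure from our tests:

```python
def api_calls_per_hour(packages_per_minute: float, carriers_rate_shopped: int) -> float:
    """Sustained API calls per hour from one conveyor line.

    Assumes one rate request per carrier plus one label request per package.
    """
    calls_per_package = carriers_rate_shopped + 1
    return packages_per_minute * 60 * calls_per_package


line = api_calls_per_hour(packages_per_minute=30, carriers_rate_shopped=3)
print(f"One line: {line:,.0f} calls/hour ({line / 3600:.1f} calls/second)")
```

The difference from a user-based test is the shape of the load: the line produces this rate for the whole shift, and every call sits on the critical path of a moving package.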

What Really Happens When APIs Can't Keep Up

Picture this: your $1M+ automated packaging line suddenly routes packages to manual processing because an API call took 800ms instead of 200ms. One enterprise retail customer of ours uses an autoboxing solution that spits out 400 shipments per hour, or just under 7 packages per minute. Relying on a carrier API can cause a machine they've spent $1M+ on to fail. Ouch.

The effects cascade fast. Even one extra second of latency per package can create backups that ripple down the conveyor, leading to operational delays, overtime, and SLA failures. The manual fallback is no better: traditional manual scanning takes 5+ seconds per package and misses roughly 10% of shipments during peak periods.
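The cascade follows from basic queueing: once each package takes longer to handle than the gap between arrivals, every package waits longer than the one before it. A minimal deterministic sketch using Lindley's recursion (next wait = max(0, previous wait + service time - arrival gap)); the 2-second gap and 3-second service time are illustrative assumptions:

```python
def waits(n_packages: int, arrival_gap_s: float, service_s: float) -> list[float]:
    """Queueing delay for each package on a single-station line (n_packages >= 1).

    Lindley's recursion: W[k+1] = max(0, W[k] + service - gap).
    Arrivals and service times are deterministic, for illustration only.
    """
    w = [0.0]
    for _ in range(n_packages - 1):
        w.append(max(0.0, w[-1] + service_s - arrival_gap_s))
    return w


# 30 packages/min means a 2 s gap; suppose a slow API call stretches handling to 3 s.
delays = waits(n_packages=60, arrival_gap_s=2.0, service_s=3.0)
print(f"Package 60 waits {delays[-1]:.0f}s before its turn")
```

When service time is below the gap, the wait stays at zero. When it exceeds the gap, even by one second, the delay grows with every package until something diverts the overflow.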

Benchmarking the Big Players - Our 30-Day Stress Test

We put major carrier integration providers through a simulated warehouse environment: concurrent requests mimicking conveyor speeds, peak season volumes, and the unforgiving reality of automated systems that never pause for coffee breaks.

The results expose a fundamental mismatch between API aggregator capabilities and warehouse automation requirements. A common guideline for response times looks like this:

- 0.1 seconds: ideal, perceived as instantaneous by users.
- 0.1 to 1 second: good; users notice a slight delay but remain uninterrupted.
- 1 to 2 seconds: acceptable for most applications, but may impact user experience in real-time systems.
- 2 to 5 seconds: tolerable for non-critical operations, but users may become impatient.
- 5+ seconds: generally unacceptable, likely to result in user frustration and abandonment.

But here's the kicker: those thresholds describe human patience. Your conveyor system doesn't get impatient; it just fails.
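Encoding these bands makes it easy to label measured latencies in a monitoring script. A minimal sketch: the boundaries come from the guideline above, and treating each upper bound as inclusive is our own assumption:

```python
# (inclusive upper bound in seconds, label), checked in order
BANDS = [
    (0.1, "ideal"),
    (1.0, "good"),
    (2.0, "acceptable"),
    (5.0, "tolerable"),
]


def latency_band(seconds: float) -> str:
    """Map a response time to the guideline band it falls in."""
    for upper, label in BANDS:
        if seconds <= upper:
            return label
    return "unacceptable"


for s in (0.08, 0.8, 1.5, 3.0, 6.0):
    print(s, latency_band(s))
```

For a conveyor with a 2-second window for the whole scan-to-print cycle, even a call in the "acceptable" band can consume nearly all of it.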

The Aggregator vs Direct Integration Performance Gap

API aggregators like EasyPost, ShipEngine, and Shippo face architectural constraints that direct carrier connections avoid. Network hops, rate limiting, and shared infrastructure create bottlenecks that become showstoppers at conveyor speeds.

Meanwhile, batch shipping operations requiring 1,500+ shipments processed in seconds remain impossible through current API-first approaches. The math simply doesn't work when you need sub-200ms response times consistently.
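The math is easy to check. Sequential processing time grows linearly with batch size, so finishing in seconds requires many calls in flight at once, assuming the provider's rate limits even allow it. A sketch using integer milliseconds to avoid float rounding; the 10-second target is a hypothetical example:

```python
import math


def sequential_seconds(shipments: int, latency_ms: int) -> float:
    """Wall-clock time if each shipment's API call waits for the previous one."""
    return shipments * latency_ms / 1000


def required_concurrency(shipments: int, latency_ms: int, target_s: int) -> int:
    """Calls that must be in flight simultaneously to finish within target_s."""
    # Total call-time in ms divided by the time budget in ms, rounded up.
    return math.ceil(shipments * latency_ms / (target_s * 1000))


print(sequential_seconds(1500, 200))        # one call at a time
print(required_concurrency(1500, 200, 10))  # parallel calls for a 10 s batch
```

Even at a consistent 200ms, 1,500 sequential calls take 300 seconds; finishing in 10 seconds would need 30 concurrent calls sustained for the whole batch.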

Beyond the Marketing Claims: What 'Sub-Second' Really Means

Vendor marketing loves throwing around performance claims, but "sub-second" covers everything from 10ms to 999ms. For warehouse automation, the distinction matters enormously.

Industry thresholds break down into clear categories. In industries where real-time interactions are crucial, like finance or gaming, response times in the range of 0.1-0.5 milliseconds are deemed excellent. More generally, real-time APIs demand <50ms P50 latency, web application APIs can tolerate <200ms P50, and enterprise APIs might survive at <500ms P50. Warehouse automation falls into the real-time category.

The P99 Problem Nobody Talks About

Here's what vendors don't advertise: P99 latency (the time within which 99% of requests complete) reveals where systems really break. Some rate of failure is acceptable, as determined by the "number of nines" in your SLOs or your organization's error budget. But when your P99 hits 2+ seconds, expect serious operational problems.
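A healthy median can hide a broken tail. This minimal sketch computes P50 and P99 with the nearest-rank method and checks them against the <50ms real-time P50 target and the 2-second P99 danger line. The sample data is synthetic and purely illustrative:

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Synthetic latencies in ms: mostly fast, with a slow 3% tail (illustrative only).
samples = [40.0] * 97 + [2500.0] * 3

p50 = percentile(samples, 50)
p99 = percentile(samples, 99)
print(f"P50={p50:.0f}ms  P99={p99:.0f}ms")
print("P50 meets real-time target (<50ms):", p50 < 50)
print("P99 in danger zone (>=2s):", p99 >= 2000)
```

This service passes a P50-only check, yet on a conveyor line 3 packages in every 100 would blow through the window.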

Peak season traffic spikes, carrier API maintenance windows, and network hiccups reveal true system capabilities. Software updates, infrastructure upgrades, and shifting usage patterns can alter API performance, rendering past testing results obsolete. Your conveyor belt doesn't care about EasyPost's maintenance schedule.

Solutions That Actually Work at Scale

The painful truth: pure API approaches hit walls that hybrid architectures avoid. The trade-off looks like this:

- On-platform engines: land on the high-maintenance, high-speed end.
- API engines (plug-and-go): ✅ low maintenance; ❌ high latency, especially as operational throughput scales up.

Successful high-volume operations combine API flexibility with on-platform speed. This means maintaining local rate cards, carrier rules, and routing logic on-platform while using APIs for tracking updates and occasional rate checks. At conveyor speeds, an on-platform or hybrid architecture is required.
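A minimal sketch of the "local rate cards" half of a hybrid setup: rates are looked up in memory, with no network call on the conveyor's critical path. The carriers, weight brackets, and prices are hypothetical placeholders; in practice they would come from carrier contracts and be refreshed out of band:

```python
from bisect import bisect_left
from typing import Optional

# Hypothetical rate cards: carrier -> (max weight in kg per bracket, price per bracket).
RATE_CARDS: dict[str, tuple[list[float], list[float]]] = {
    "carrier_a": ([1.0, 5.0, 20.0], [6.50, 9.80, 18.00]),
    "carrier_b": ([2.0, 10.0, 30.0], [7.10, 11.20, 21.50]),
}


def local_rate(carrier: str, weight_kg: float) -> Optional[float]:
    """Price from the in-memory card, or None if the parcel exceeds the card."""
    limits, prices = RATE_CARDS[carrier]
    i = bisect_left(limits, weight_kg)  # first bracket whose limit >= weight
    return prices[i] if i < len(prices) else None


def cheapest(weight_kg: float) -> Optional[tuple[str, float]]:
    """Rate-shop across all local cards without any API call."""
    quotes = [(c, r) for c in RATE_CARDS if (r := local_rate(c, weight_kg)) is not None]
    return min(quotes, key=lambda q: q[1]) if quotes else None


print(cheapest(3.2))   # both carriers quote; the lower price wins
print(cheapest(25.0))  # only carrier_b's card covers 25 kg
```

The cost of this speed is upkeep: someone has to keep the cards current with carrier changes, which is exactly the high-maintenance side of on-platform engines.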

Asynchronous workflows become essential for bulk operations. Instead of blocking conveyor flow while waiting for API responses, queue requests and use webhooks for status updates. A tracking webhook adds no latency to the conveyor flow and keeps customers updated on time, handling post-shipment communication without impacting throughput.
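The queueing half of that pattern can be sketched with `asyncio`. The `submit_label_request` coroutine is a hypothetical stand-in for a carrier API call (its sleep simulates latency), and webhooks are not modeled. The producer only enqueues and moves on, while a small pool of background workers drains the queue:

```python
import asyncio


async def submit_label_request(package_id: int) -> str:
    """Hypothetical stand-in for a carrier API call (simulated 0.2 s latency)."""
    await asyncio.sleep(0.2)
    return f"label-{package_id}"


async def worker(queue: asyncio.Queue, results: dict[int, str]) -> None:
    """Background consumer: calls the API off the conveyor's critical path."""
    while True:
        package_id = await queue.get()
        try:
            results[package_id] = await submit_label_request(package_id)
        finally:
            queue.task_done()


async def run_line(n_packages: int, n_workers: int = 2) -> dict[int, str]:
    queue: asyncio.Queue = asyncio.Queue()
    results: dict[int, str] = {}
    workers = [asyncio.create_task(worker(queue, results)) for _ in range(n_workers)]
    for package_id in range(n_packages):
        queue.put_nowait(package_id)  # conveyor side: enqueue and keep moving
    await queue.join()  # demo only: wait until every queued request is handled
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)  # let cancellations finish
    return results


results = asyncio.run(run_line(8))
print(len(results), results[0])
```

Keep the worker count within your provider's rate limits; more concurrency only moves the bottleneck to the limiter.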

The Caching and Multi-Threading Controversy

Some integration teams resort to aggressive caching or multi-threading to boost throughput. These approaches often violate carrier terms of service and create data consistency problems. Cached rates become stale during peak season surcharges. Multi-threaded requests trigger rate limiting faster.

Sustainable optimization focuses on architectural changes rather than workarounds that carriers might ban without notice.

Recommendations by Volume and Use Case

Volume dictates architecture. In-store fulfillment is a great fit for carrier APIs. Most stores only ship a few dozen to a few hundred packages per day, so a bit of latency isn't operationally harmful. Plus, stores often use no more than three carriers due to space constraints, making APIs an efficient choice.

But warehouses and distribution centers (DCs) face different math entirely. In high-volume DCs, throughput becomes king: conveyor-based shipping systems process 20-30 packages per minute, non-stop.

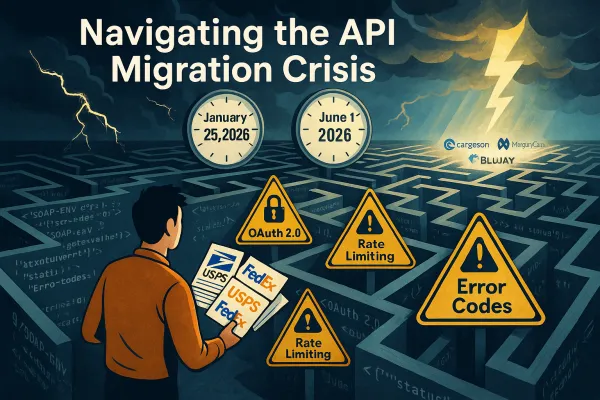

For in-store fulfillment (50-200 packages daily): API aggregators work fine. Latency rarely impacts operations, and integration simplicity outweighs performance concerns. Solutions like Cargoson, EasyPost, or ShipEngine provide sufficient capabilities.

For high-volume distribution centers (1,000+ packages daily): Hybrid approaches become necessary. Combine on-platform carrier engines with API integration for specific functions. Evaluate whether providers like Cargoson, ProShip, or nShift offer the architectural flexibility your throughput demands.

The harsh reality: a warehouse processing 10+ packages per minute at end-of-line (EOL) shipping can't tolerate multi-second API calls. If your conveyor runs continuously, APIs become operational constraints rather than solutions.
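These recommendations reduce to a simple decision rule. A sketch using this article's thresholds (up to about 200 packages/day for API aggregators, 1,000+ per day or continuous flow of 10+ packages per minute for hybrid). The band in between isn't covered by those guidelines, so the function flags it for evaluation rather than guessing:

```python
def recommended_architecture(packages_per_day: int, peak_packages_per_minute: float = 0.0) -> str:
    """Map shipping volume to this article's architecture guidance."""
    # Continuous EOL flow of 10+ packages/minute can't absorb multi-second API calls.
    if peak_packages_per_minute >= 10 or packages_per_day >= 1000:
        return "hybrid"
    if packages_per_day <= 200:
        return "api_aggregator"
    return "evaluate"  # between the article's bands: benchmark against your line speed


print(recommended_architecture(150))
print(recommended_architecture(600, peak_packages_per_minute=12))
```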