Enterprise TMS Rate Limiting at Scale: Why 73% of Multi-Carrier Integrations Fail Under Load and How to Build Systems That Don't

Picture a €2.1 million TMS rollout crumbling during peak season because carrier APIs can't handle the traffic surge. That isn't just expensive. For a mid-sized German automotive parts manufacturer that has already invested six months and €800,000 before realizing its carrier network across 12 countries can't be integrated without custom development, it's the kind of failure that threatens the company's survival. Sound familiar?

The numbers tell the real story. According to the Standish Group's CHAOS 2020 report, 66% of technology projects end in partial or total failure, and McKinsey research shows that 17% of large IT projects go so badly they threaten the company's existence. For enterprise TMS systems handling high-volume, multi-modal freight operations, the stakes are higher still: 76% of logistics transformations fail to achieve their performance objectives.

Here's what most integration teams miss: connectivity is crucial to TMS success because no other piece of supply chain management technology has to communicate with as many different parties as a transport management system—ERP, order management systems, warehouse management systems, finance, plus trading partners, vendors, customers, 3PLs, carriers and more. When your rate limiting strategy can't handle this complexity, you're building on quicksand.

The Hidden Crisis in Enterprise TMS API Performance

Enterprise TMS platforms face a perfect storm of API challenges that simple rate limiting can't solve. Implementing API integration into a TMS can be overwhelming for companies without EDI connection fundamentals, especially when traditional manual processes based on Excel spreadsheet exports undermine the efficiency of new freight management systems. The result? Integration failures cascade across your entire European network.

Consider the complexity: your TMS needs to pull real-time rates from 15+ carriers, process shipment bookings across parcel and LTL modes, handle customs documentation for cross-border freight, and sync with warehouse management systems—all while maintaining sub-second response times during peak shipping periods.

On top of that, the TMS must integrate with carriers across 15 countries and feed information back to multiple warehouse management systems, all while planning for API failures, data format differences, and carriers that still accept only EDI formats from 1995. When carriers like DHL process thousands of rate requests per minute while regional specialists in rural Italy still fax rate sheets, your rate limiting strategy needs surgical precision.

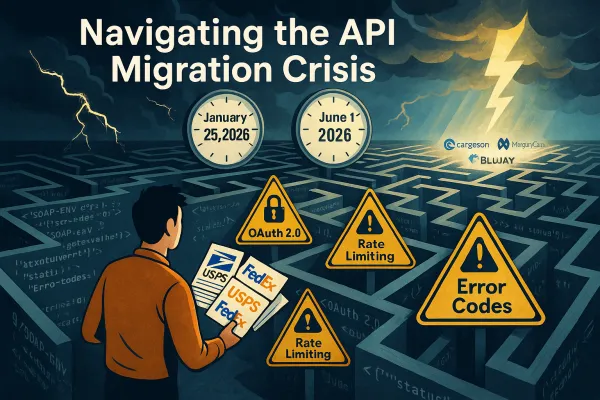

The enterprise context makes this worse. Legacy platforms like MercuryGate offer limited open API support, which restricts automation for international and intermodal logistics; one assessment describes a "glaring weakness in lack of a modern API, making integrations and automations very difficult to setup." Meanwhile, modern solutions like Cargoson, Manhattan Active, and Blue Yonder must handle the complexity that legacy systems couldn't address.

Why Standard Rate Limiting Breaks Down for Freight Operations

Standard API rate limiting assumes predictable traffic patterns and homogeneous requests. Enterprise freight operations break both assumptions. APIs are relatively new in the freight industry and less widely adopted than EDI, but API connectivity is growing, especially among parcel and LTL carriers. The result is a hybrid environment where your rate limiting must accommodate both real-time API calls and legacy EDI batch processing.

Multi-modal complexity compounds the challenge. Parcel APIs might handle 1000 requests per minute for quick rate quotes, while LTL carriers need complex payload validation for hazmat classifications and dimensional weight calculations. Ocean freight requires entirely different patterns—high-volume rate requests followed by long-duration booking confirmations that can span hours.

A fixed window counter defines a time window and a maximum request count. Once the counter reaches the limit, further requests are rejected until the window resets, which produces traffic spikes at window boundaries as clients wait for the reset and then retry immediately. A client can also fit up to twice the limit into a short span straddling the boundary. During Monday morning rate shopping or end-of-month shipment surges, this creates exactly the bottlenecks enterprise TMS systems can't afford.
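To make the boundary problem concrete, here is a minimal fixed window counter sketch. The class name `FixedWindowLimiter` and the explicit `now` timestamp parameter are illustrative assumptions rather than any library's API; passing time in explicitly shows how a burst just before and just after a boundary gets through.

```python
class FixedWindowLimiter:
    """Hypothetical fixed window counter: at most `limit` requests per `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self.current_window = None  # index of the window currently being counted
        self.count = 0

    def allow(self, now: float) -> bool:
        window_index = int(now // self.window)
        if window_index != self.current_window:
            # A new window has started, so the counter resets to zero.
            self.current_window = window_index
            self.count = 0
        if self.count < self.limit:
            self.count += 1
            return True
        return False


limiter = FixedWindowLimiter(limit=100, window=60.0)
# 100 requests in the last second of window 0, then 100 in the first second of window 1.
late = [limiter.allow(59.0 + i / 100) for i in range(100)]
early = [limiter.allow(60.0 + i / 100) for i in range(100)]
accepted = sum(late) + sum(early)
print(accepted)  # 200 accepted in about two seconds, twice the nominal per-minute limit
```

A sliding window or token bucket smooths out exactly this edge case, at the cost of slightly more state per caller.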

Microservice architectures make this much harder. Modern TMS platforms split functionality across services: rate shopping, carrier selection, booking execution, tracking updates, and document generation. Each service has its own limits, but end-to-end operations span several services. When your rate shopping service hits its limit while booking remains available, you get partial transaction failures that are nearly impossible to debug.
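One way to avoid half-completed transactions is to check every service's budget before starting an end-to-end operation, and to consume capacity only if all of them can admit it. The sketch below assumes simple in-process token buckets per service; the service names and the `acquire_all` helper are hypothetical. In a distributed deployment the same all-or-nothing check would have to run atomically against shared state (for example, inside a single Redis Lua script), which this sketch does not cover.

```python
class TokenBucket:
    """Hypothetical per-service budget: up to `capacity` tokens, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity
        self.updated = now

    def refill(self, now: float) -> None:
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now


def acquire_all(buckets, services, now, cost=1.0):
    """Admit an end-to-end workflow only if every service it touches has capacity."""
    for name in services:
        buckets[name].refill(now)
    if any(buckets[name].tokens < cost for name in services):
        return False  # reject up front instead of failing partway through the workflow
    for name in services:
        buckets[name].tokens -= cost
    return True


buckets = {
    "rate_shopping": TokenBucket(capacity=2, rate=0.0),
    "booking": TokenBucket(capacity=5, rate=0.0),
}
workflow = ["rate_shopping", "booking"]
results = [acquire_all(buckets, workflow, now=0.0) for _ in range(3)]
print(results)  # [True, True, False]
print(buckets["booking"].tokens)  # 3.0: the rejected workflow consumed no booking capacity
```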

The Enterprise Context: Beyond Simple Request Counting

Enterprise TMS deployments serve diverse workflows with wildly different throughput expectations. Your procurement team might batch-process 10,000 rate requests during quarterly negotiations, while customer service needs instant access to shipment status updates. Dynamic rate limiting has been reported to cut server load by up to 40% during peak times while maintaining availability, but generic dynamic limits don't understand freight business logic.
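One freight-aware refinement is to reserve part of a shared budget for interactive traffic, so a large procurement batch cannot starve customer service lookups. This is a minimal sketch assuming a single per-window budget and two hypothetical traffic classes, `interactive` and `batch`; reserving 20 of 100 slots is an illustrative choice, not a recommendation.

```python
class ReservedCapacityLimiter:
    """Hypothetical shared per-window budget with slots reserved for interactive traffic."""

    def __init__(self, limit: int, interactive_reserve: int) -> None:
        self.limit = limit
        # Batch jobs may only use capacity outside the reserved slots.
        self.batch_ceiling = limit - interactive_reserve
        self.used = 0

    def reset_window(self) -> None:
        # Called by a scheduler at the start of each window.
        self.used = 0

    def allow(self, traffic_class: str) -> bool:
        # Interactive requests may use the whole budget; batch requests stop earlier.
        ceiling = self.limit if traffic_class == "interactive" else self.batch_ceiling
        if self.used < ceiling:
            self.used += 1
            return True
        return False


limiter = ReservedCapacityLimiter(limit=100, interactive_reserve=20)
batch_admitted = sum(limiter.allow("batch") for _ in range(1000))
interactive_admitted = sum(limiter.allow("interactive") for _ in range(50))
print(batch_admitted, interactive_admitted)  # 80 20
```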

Identity management adds another layer of complexity. Enterprise customers often use service accounts, API keys for different business units, and user-level authentication, all within the same deployment. Bespoke limits matter for specific clients whose business impact, scale, or infrastructure costs warrant special consideration. They are not only about protection; they also let these users get the most out of the API without compromising system integrity.
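Bespoke limits are easiest to manage as a lookup that falls back from the most specific identity to the least specific. The sketch below assumes a hypothetical hierarchy of API key, then business unit, then a tenant-wide default; all identifiers and numbers are illustrative.

```python
from typing import Optional

# Hypothetical per-minute limits at each level of the identity hierarchy.
KEY_OVERRIDES = {"key-procurement-batch": 5000}
BUSINESS_UNIT_LIMITS = {"customer-service": 1200, "procurement": 600}
DEFAULT_LIMIT = 300


def resolve_limit(api_key: str, business_unit: Optional[str]) -> int:
    """Return the most specific configured limit for a caller."""
    if api_key in KEY_OVERRIDES:
        # Bespoke override for a specific client or service account.
        return KEY_OVERRIDES[api_key]
    if business_unit in BUSINESS_UNIT_LIMITS:
        return BUSINESS_UNIT_LIMITS[business_unit]
    return DEFAULT_LIMIT


print(resolve_limit("key-procurement-batch", "procurement"))  # 5000
print(resolve_limit("key-123", "customer-service"))  # 1200
print(resolve_limit("key-456", None))  # 300
```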

Static policies throttle operations during the spikes that matter most. When your largest customer launches a new product line and needs expedited shipping across Europe, generic rate limits become business blockers rather than protections.

Production-Grade Rate Limiting Architecture for TMS

Building enterprise TMS rate limiting starts with understanding freight-specific traffic patterns. A handful of standard algorithms form the foundation of effective rate limiting, and knowing their trade-offs is essential to balancing protection with performance. Freight operations, however, need patterns beyond the standard approaches.

Implement sliding window counters with freight-aware logic. Instead of simple request counting, weight requests by complexity: rate shopping for 50 LTL shipments consumes more resources than 50 parcel tracking queries. Use carrier response times to dynamically adjust limits—if UPS APIs are responding slowly, reduce concurrent requests to prevent cascading delays.
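A minimal sketch of a cost-weighted sliding window counter, assuming the common approximation that blends the previous window's total with the current window's total according to how much of the previous window still overlaps. The per-operation weights in `REQUEST_COSTS` are hypothetical placeholders; in practice you would calibrate them from measured carrier and backend load. Timestamps are seconds from an arbitrary starting point.

```python
REQUEST_COSTS = {"parcel_tracking": 1, "parcel_rate": 2, "ltl_rate": 5}  # hypothetical weights


class WeightedSlidingWindow:
    """Sliding window counter where each request consumes its cost instead of 1."""

    def __init__(self, budget: float, window: float) -> None:
        self.budget = budget
        self.window = window
        self.window_start = 0.0
        self.current = 0.0   # cost consumed in the current window
        self.previous = 0.0  # cost consumed in the previous window

    def _advance(self, now: float) -> None:
        windows_passed = int((now - self.window_start) // self.window)
        if windows_passed >= 1:
            # If more than one window has passed, the previous window was empty.
            self.previous = self.current if windows_passed == 1 else 0.0
            self.current = 0.0
            self.window_start += windows_passed * self.window

    def allow(self, operation: str, now: float) -> bool:
        self._advance(now)
        cost = REQUEST_COSTS[operation]
        elapsed_fraction = (now - self.window_start) / self.window
        # Weight the previous window by the share of it still inside the sliding window.
        estimated = self.previous * (1 - elapsed_fraction) + self.current
        if estimated + cost > self.budget:
            return False
        self.current += cost
        return True


limiter = WeightedSlidingWindow(budget=100, window=60.0)
ltl_ok = sum(limiter.allow("ltl_rate", now=1.0) for _ in range(50))
print(ltl_ok)  # 20: at cost 5 each, LTL rate requests exhaust a budget of 100 after 20
```

The same budget would admit 100 parcel tracking queries, which is the point: the limiter meters load, not raw request counts.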

A common approach is to lower limits when error rates exceed 5% and to reduce concurrent requests when latency crosses 500 ms, with adaptive logic tuning an underlying token bucket or sliding window limiter in real time. For TMS systems, monitor error rates per carrier. When DHL's API starts returning 429 responses, back off from DHL (honoring any Retry-After header) and temporarily shift traffic to alternative carriers rather than stalling the whole workflow.
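A minimal sketch of the adaptive idea, assuming an AIMD-style (additive increase, multiplicative decrease) controller that a scheduler calls once per interval with a carrier's observed error rate and p95 latency. The 5% and 500 ms thresholds come from the text; the step sizes, the bounds, and the `AdaptiveCarrierLimit` name are assumptions.

```python
class AdaptiveCarrierLimit:
    """Hypothetical AIMD controller for one carrier's concurrent-request limit."""

    def __init__(self, initial: int, minimum: int = 1, maximum: int = 200) -> None:
        self.limit = initial
        self.minimum = minimum
        self.maximum = maximum

    def update(self, error_rate: float, p95_latency_ms: float) -> int:
        if error_rate > 0.05 or p95_latency_ms > 500:
            # Multiplicative decrease: back off quickly when the carrier is struggling.
            self.limit = max(self.minimum, self.limit // 2)
        else:
            # Additive increase: probe for spare capacity slowly while it is healthy.
            self.limit = min(self.maximum, self.limit + 1)
        return self.limit


dhl = AdaptiveCarrierLimit(initial=40)
print(dhl.update(error_rate=0.01, p95_latency_ms=220))  # 41: healthy, grow by one
print(dhl.update(error_rate=0.09, p95_latency_ms=310))  # 20: error rate above 5%
print(dhl.update(error_rate=0.02, p95_latency_ms=750))  # 10: latency above 500 ms
```

When one carrier's limit shrinks, the carrier-selection layer can route the overflow to alternatives instead of queueing it.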

API gateway implementation becomes crucial for enterprise TMS. Configure policies per route, user, IP, and API key, with granular limits per second, minute, hour, and day. Solutions like Zuplo let developers build custom rate-limiting rules directly within the platform, and Kong, AWS API Gateway, or similar platforms can be configured with tiered limits that reflect freight workflows.
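Stacked limits at several granularities follow one rule: a request passes only if every window still has room. The in-process sketch below illustrates that rule with fixed windows keyed by caller. It is not the configuration format of Kong, AWS API Gateway, or Zuplo, and the limit values are hypothetical.

```python
from collections import defaultdict

# Hypothetical stacked limits for one API key: (window length in seconds, max requests).
LIMITS = [(1, 10), (60, 300), (3600, 5000), (86400, 50000)]


class StackedLimiter:
    def __init__(self, limits):
        self.limits = limits
        # (caller, window_length, window_index) -> requests counted so far.
        # A real limiter would also evict counters for expired windows.
        self.counts = defaultdict(int)

    def allow(self, caller: str, now: float) -> bool:
        keys = [(caller, length, int(now // length)) for length, _ in self.limits]
        # Check every window first so a rejected request consumes nothing.
        if any(self.counts[key] >= limit for key, (_, limit) in zip(keys, self.limits)):
            return False
        for key in keys:
            self.counts[key] += 1
        return True


limiter = StackedLimiter(LIMITS)
per_second = sum(limiter.allow("key-erp", now=0.5) for _ in range(25))
print(per_second)  # 10: the per-second limit binds first
```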

Carrier-Specific Rate Limiting Strategies

Different carriers require fundamentally different approaches. FedEx APIs might handle steady traffic well but struggle with burst patterns during holiday shipping. Regional carriers often run infrastructure that can't match enterprise-grade availability. APIs help overcome the data-retrieval limits of legacy systems, where data is often hard to access and incomplete, but your rate limiting must account for these disparities between carriers.

Build carrier profiles that capture real-world performance characteristics. Monitor historical response times, error patterns, and maintenance windows. When carrier APIs show degraded performance, implement circuit breaker patterns that temporarily route traffic elsewhere while maintaining service availability.
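A minimal per-carrier circuit breaker sketch, assuming a consecutive-failure threshold and a fixed cool-down. After the cool-down the carrier receives traffic again as a trial, and a single further failure reopens the circuit immediately. The thresholds, the carrier names, the `CarrierCircuitBreaker` class, and the `pick_carrier` helper are all hypothetical.

```python
class CarrierCircuitBreaker:
    """Hypothetical breaker: opens after `threshold` consecutive failures for `cooldown` seconds."""

    def __init__(self, threshold: int = 5, cooldown: float = 30.0) -> None:
        self.threshold = threshold
        self.cooldown = cooldown
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def available(self, now: float) -> bool:
        if self.opened_at is None:
            return True
        # Half-open: once the cool-down has passed, let traffic probe the carrier again.
        return now - self.opened_at >= self.cooldown

    def record(self, success: bool, now: float) -> None:
        if success:
            self.failures = 0
            self.opened_at = None
        else:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = now  # open, or reopen after a failed trial


def pick_carrier(preferred, breakers, now):
    """Return the first carrier in preference order whose circuit allows traffic."""
    for carrier in preferred:
        if breakers[carrier].available(now):
            return carrier
    return None


breakers = {"carrier_a": CarrierCircuitBreaker(threshold=3), "carrier_b": CarrierCircuitBreaker()}
for t in range(3):
    breakers["carrier_a"].record(success=False, now=float(t))
print(pick_carrier(["carrier_a", "carrier_b"], breakers, now=5.0))   # carrier_b
print(pick_carrier(["carrier_a", "carrier_b"], breakers, now=40.0))  # carrier_a (trial after cool-down)
```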

Handle bursty traffic intelligently. When performing scheduled tasks, apply jitter to requests to avoid the thundering herd problem—try to avoid performing tasks "on the hour" for actions like daily email digests. For TMS systems, this means staggering batch operations like overnight rate updates or weekly performance reports across time windows to prevent API overload.
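A small sketch of jittered scheduling, assuming each batch job (an overnight rate refresh, a weekly report) has a nominal start time and may begin anywhere within a spread window. Hashing a stable job ID gives each job a deterministic offset, so the jobs stay spread out across restarts instead of reshuffling every run. The job names and the 30-minute spread are hypothetical.

```python
import hashlib
from datetime import datetime, timedelta


def jittered_start(job_id: str, nominal: datetime, spread: timedelta) -> datetime:
    """Offset a job's start by a stable, pseudo-random number of seconds within `spread`."""
    digest = hashlib.sha256(job_id.encode("utf-8")).digest()
    spread_seconds = int(spread.total_seconds())
    offset = int.from_bytes(digest[:8], "big") % spread_seconds
    return nominal + timedelta(seconds=offset)


nominal = datetime(2024, 1, 1, 2, 0)  # 02:00, a hypothetical overnight batch slot
for job in ["rates-carrier-a", "rates-carrier-b", "weekly-kpi-report"]:
    print(job, jittered_start(job, nominal, timedelta(minutes=30)).time())
```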

Advanced Implementation Patterns That Actually Work

Algorithm selection matters more than most teams realize. Fixed window counters are simplest but can lead to traffic spikes at window boundaries. For steady TMS traffic like shipment tracking, fixed windows work well. For fluctuating patterns like rate shopping, sliding windows prevent the boundary problems.

Token bucket algorithms excel at handling occasional bursts, which is exactly what happens during quarterly freight negotiations or seasonal shipping spikes. Tokens accumulate during quiet periods up to the bucket's capacity and can then be spent in a burst, while the long-run rate stays capped by the refill rate. API processing limits are typically measured in transactions per second (TPS), and rate limiting enforces a ceiling either on the number of transactions or on the amount of data in each transaction.
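A minimal token bucket sketch with explicit timestamps so the behavior is easy to trace. The bucket refills at `rate` tokens per second up to `capacity`; the class name and numbers are illustrative.

```python
class TokenBucket:
    def __init__(self, capacity: float, rate: float, now: float = 0.0) -> None:
        self.capacity = capacity  # maximum burst size
        self.rate = rate          # sustained tokens (requests) per second
        self.tokens = capacity
        self.updated = now

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(capacity=50, rate=10)  # 10 requests/s sustained, bursts of up to 50
burst = sum(bucket.allow(now=0.0) for _ in range(80))
print(burst)  # 50: the full bucket absorbs the burst, the rest is rejected
later = sum(bucket.allow(now=2.0) for _ in range(80))
print(later)  # 20: two seconds at 10 tokens/s refilled 20 tokens
```

The `cost` parameter also covers the data-volume variant: charge tokens per kilobyte or per shipment line instead of per call.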

Implement tiered rate limits that reflect business reality. Enterprise customers running platforms like Cargoson, MercuryGate, or Manhattan Active need higher limits than small shippers using basic freight tools. Subscription tiers let you offer different service levels, and generate revenue, across the whole customer range, from occasional users to enterprise accounts.

Caching strategies become force multipliers. Integrating caching solutions like Redis can significantly reduce unnecessary API calls by storing frequently requested data, preventing users from hitting rate limits unnecessarily and improving overall API responsiveness. Cache rate tables, carrier service maps, and shipment status data with appropriate TTLs to reduce API load.
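A minimal in-process TTL cache sketch for carrier rate quotes. In production this role is often filled by Redis with key expiry; here a dictionary and an injectable clock keep the example self-contained. `fetch_quote` stands in for a hypothetical carrier API call and returns placeholder values, and the 15-minute TTL is an assumption, since how long a quote stays valid depends on the carrier.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class TTLCache:
    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: Dict[Hashable, Tuple[float, object]] = {}  # key -> (expires_at, value)

    def get_or_fetch(self, key: Hashable, fetch: Callable[[], object]) -> object:
        now = self.clock()
        hit = self.entries.get(key)
        if hit is not None and now < hit[0]:
            return hit[1]  # fresh cached value: no carrier API call, no rate-limit cost
        value = fetch()
        self.entries[key] = (now + self.ttl, value)
        return value


calls = []


def fetch_quote():
    calls.append(1)  # count simulated carrier API calls
    return {"carrier": "carrier_a", "lane": "DE-IT", "price_eur": 412.50}  # placeholder values


fake_time = [0.0]
cache = TTLCache(ttl_seconds=900, clock=lambda: fake_time[0])
key = ("carrier_a", "DE-IT", "LTL", 3)  # carrier, lane, mode, pallet count
cache.get_or_fetch(key, fetch_quote)
cache.get_or_fetch(key, fetch_quote)
fake_time[0] = 1000.0
cache.get_or_fetch(key, fetch_quote)
print(len(calls))  # 2: one initial fetch, one refresh after the TTL expired
```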

Monitoring and Observability

Essential metrics go beyond simple request counts. Track request patterns by carrier, error rates by endpoint, and data volume, and use them to adjust limits dynamically. Monitor server metrics in real time and set automated triggers that adjust limits gradually, preventing sudden disruptions. For TMS systems, also monitor freight-specific metrics: rate quote response times, booking success rates, and tracking update frequencies.

Track 429 (Too Many Requests) responses, but more importantly, track the business impact: failed bookings, delayed shipments, or customer service escalations. Set up dashboards in Datadog, New Relic, or Grafana that show both technical metrics and business outcomes.

Transparency in communicating API rate limits is the hallmark of good API stewardship—building trust with users by making them aware of engagement rules. Platforms like LinkedIn and GitHub provide users access to their rate limit status through analytics dashboards and API endpoints, allowing users to monitor usage, view quotas, and receive alerts. For enterprise TMS, provide similar visibility so customers can optimize their integration patterns.
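Per-response headers are the simplest form of this visibility. The sketch below builds `X-RateLimit-*` headers in the style GitHub uses (limit, remaining, and reset as a Unix timestamp) plus the standard HTTP `Retry-After` header once the budget is exhausted. The function name is hypothetical, and following GitHub's naming is an assumption, since header names vary between APIs.

```python
import math
from typing import Dict


def rate_limit_headers(limit: int, used: int, window_reset_epoch: float, now_epoch: float) -> Dict[str, str]:
    """Build informational rate-limit headers for an API response."""
    remaining = max(0, limit - used)
    headers = {
        "X-RateLimit-Limit": str(limit),
        "X-RateLimit-Remaining": str(remaining),
        "X-RateLimit-Reset": str(math.ceil(window_reset_epoch)),
    }
    if remaining == 0:
        # Budget exhausted: tell clients (e.g. on a 429 response) how many seconds to wait.
        headers["Retry-After"] = str(max(1, math.ceil(window_reset_epoch - now_epoch)))
    return headers


print(rate_limit_headers(limit=1000, used=1000, window_reset_epoch=1_700_000_060, now_epoch=1_700_000_015))
```

Clients that read these headers can pace their own batch jobs instead of discovering the limit through rejected bookings.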

Lessons from High-Volume TMS Deployments

Real-world deployments reveal patterns you won't find in documentation. Cloud-native platforms like FreightPOP, Shippo, and Cargoson typically deploy in 6-12 weeks, while enterprise solutions require 6-12 months for full rollout. Speed isn't everything, though: user experience determines long-term success, because transport planners under pressure will revert to familiar tools if the new system isn't intuitive.

PayPal's treasury team saved 3,500 hours annually through proper system integration. For TMS systems, similar savings can come from intelligent rate limiting that prevents manual workarounds during API failures.

Common failure patterns emerge repeatedly across enterprise deployments. Teams underestimate the coordination complexity between rate limits across microservices. They implement carrier-agnostic limits that don't account for individual carrier API capabilities. Most critically, they focus on preventing system overload rather than maintaining business operations during traffic spikes.

Adoption is a leading indicator of ROI. Target more than 80% adoption within the first year, measured as the percentage of bookings made through the TMS rather than through email and Excel workarounds. Supporting usage metrics (login frequency, transactions completed, and user satisfaction scores) help predict whether ROI projections will materialize.

Building Rate Limiting That Scales With Your Enterprise

Implementation benefits extend beyond system protection. Properly implemented rate limiting has been reported to reduce API outages by up to 40%. For enterprise TMS systems, this translates to fewer manual interventions, fewer customer service calls, and higher overall system reliability.

Best practices center on developer-first monitoring and dynamic limits that adapt to real-world scaling demands. Create tiered limits based on subscription plans, but make them transparent and business-aligned. Prepare for extremes by including fallback mechanisms for handling unusually high loads, and for distributed systems, ensure rate limit changes are applied consistently with synchronized caches and automated recovery processes.

Future-proof your strategy as freight volumes continue growing and carrier API capabilities evolve. The TMS market is expected to reach $3.47 billion by 2030 at 8.92% CAGR, with acceleration stemming from enterprises replacing capital-heavy on-premises tools with scalable cloud platforms that deliver rapid deployment, lower total cost of ownership, and real-time operational visibility.

Modern platforms like Cargoson, Blue Yonder, and Oracle TM are building API-first architectures specifically designed to handle enterprise rate limiting complexity. When evaluating solutions, prioritize platforms that understand freight-specific traffic patterns rather than generic API management tools.

The enterprise TMS rate limiting challenge isn't just technical—it's about maintaining business velocity while protecting system stability. Start with freight-aware algorithms, implement carrier-specific strategies, monitor business outcomes alongside technical metrics, and build systems that scale with your operation rather than constraining it.