Carrier API Rate Limiting Under Fire: How to Stress-Test Your Integration Before Production Burns

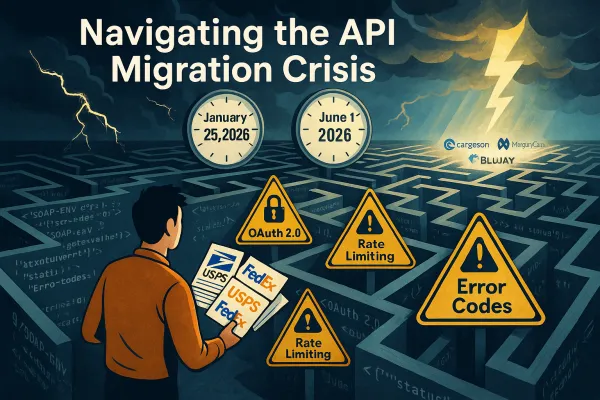

Your carrier integration isn't ready for production until you've pushed its rate limiting to the breaking point. Research shows 75% of API issues stem from mishandled rate limits, with error rates jumping beyond 5% and response times crossing 500ms thresholds when systems buckle under load.

Traditional ping tests and basic health checks won't save you when Black Friday traffic hits or when your batch processing system tries validating 500 addresses simultaneously. You need a systematic approach to stress-testing that reveals exactly how your carrier API integrations behave when pushed beyond their comfort zone.

The Rate Limiting Reality Check

DHL's test environment limits you to 500 service invocations daily, but their production thresholds operate differently. Static rate limiting approaches that worked in 2020 are failing spectacularly in 2025's complex API ecosystem.

The problem isn't just volume. Bulk processing scenarios create cascading failures that simple rate limit headers don't prepare you for. When your system tries creating 200 labels in rapid succession or validating hundreds of addresses for a large shipment, carriers like DHL start with basic limits of 250 calls per day with maximum 1 call every 5 seconds before requiring approval for higher thresholds.

Most integration engineers test happy path scenarios with a handful of requests. Real production breaks happen when your retry logic creates thundering herd problems, when webhook delays stack up during peak hours, or when failover systems all hit the same backup carrier simultaneously.

European Carrier API Rate Limit Landscape

European carriers structure their rate limits differently than their US counterparts. DHL bases minimum daily calls on average monthly shipping volume, allowing up to 10 fetches per shipment per day and expects these calls distributed evenly throughout the day.

UPS tracking starts with 250 calls daily at 1 call per 5 seconds, with additional rate limits granted based on specific use cases. The approval process varies significantly between carriers, with some requiring proof of shipping volume and others focusing on technical implementation quality.

FedEx and DSV each have their own thresholds and burst allowances, while DB Schenker applies different limits to their various API services. Multi-carrier platforms like EasyPost, ShipEngine, nShift, and Cargoson add another abstraction layer, but their rate limiting doesn't eliminate the underlying carrier restrictions.

Building Your Rate Limiting Test Harness

Effective stress testing requires systematic escalation beyond normal operational parameters. Monitor server metrics in real time, set automated triggers to adjust limits gradually, and prepare fallback mechanisms for handling unusually high loads.

Tools like k6 and Artillery make load testing accessible for developers and QA engineers, supporting different test types including stress tests, spike tests, soak tests, and smoke tests. Here's a k6 script designed specifically for carrier API stress testing:

import http from 'k6/http';

import { check, sleep } from 'k6';

export let options = {

stages: [

{ duration: '2m', target: 10 }, // Baseline

{ duration: '3m', target: 50 }, // 10% above normal

{ duration: '2m', target: 100 }, // 50% above normal

{ duration: '2m', target: 200 }, // 200% above normal

{ duration: '3m', target: 0 }, // Recovery

],

thresholds: {

http_req_duration: ['p(95)<500'], // 95% under 500ms

http_req_failed: ['rate<0.05'], // Error rate under 5%

},

};

export default function() {

let response = http.get('https://api-sandbox.dhl.com/track/shipments', {

headers: { 'DHL-API-Key': 'your-key-here' }

});

check(response, {

'status is 200': (r) => r.status === 200,

'not rate limited': (r) => r.status !== 429,

'response time ok': (r) => r.timings.duration < 500,

});

sleep(Math.random() * 2 + 1); // 1-3 second delay

}Your test environment should mirror production constraints. Establish baseline performance with normal load patterns before progressively increasing request rates. Different test types serve specific purposes: stress tests discover peak traffic limits, spike tests reveal sudden traffic surge behavior.

Test Scenario Design Matrix

Design your test scenarios around realistic failure conditions, not arbitrary numbers. Start with 10% above your normal peak load, then scale to 50% above, and finally push to 200% above normal capacity.

Concurrent user simulation matters more than raw request volume. Artillery makes it simple to send 25 virtual users per second for one minute, with configuration controlling both duration and user arrival rates. But your tests need to reflect actual usage patterns:

- Address validation bursts: 50-200 address validations in rapid succession

- Label creation spikes: Batch processing 100+ labels during peak shipping hours

- Tracking polling storms: Multiple systems querying the same tracking numbers

- Webhook replay scenarios: Failed webhooks triggering retry cascades

Time-based scenarios reveal different failure modes. Test during carrier maintenance windows, simulate peak hours across different time zones, and measure how your system behaves during sustained load over hours rather than minutes.

Failure Mode Analysis and Response Testing

Carrier APIs fail in predictable patterns, but each failure requires different recovery strategies. When applications exceed rate limits, APIs respond with 429 Too Many Requests status codes, but the recovery mechanisms vary significantly between carriers.

DHL's infrastructure protection kicks in differently than UPS's throttling mechanisms. Some carriers implement hard blocks that require waiting for reset windows, while others use sliding windows that allow gradual recovery. Your test harness must identify these patterns and verify your recovery logic handles each appropriately.

Implement throttling by slowing down requests for clients who exceed rate limits rather than blocking them entirely, using delayed request processing or queue systems. Test your circuit breaker patterns with sustained 429 responses, measure how long recovery takes, and verify that your jitter implementation prevents thundering herd problems.

Recovery Pattern Testing

Retry mechanisms with exponential backoff and jitter prevent coordinated retry storms that can overwhelm recovering systems. When rate limits are hit, implement retry mechanisms that intercept failed requests and retry when rate limits allow.

Your recovery testing should simulate realistic failure scenarios:

- Sustained 429 responses for 5-10 minutes

- Intermittent rate limiting during peak periods

- Complete carrier API downtime with failover testing

- Partial service degradation affecting only certain endpoints

Test failover logic by forcing primary carriers into rate limit states and measuring how quickly your system shifts to backup carriers. Enterprise TMS solutions like MercuryGate, Descartes, and Cargoson typically handle these transitions more gracefully than custom integrations.

Production Monitoring and Adaptive Response

Dynamic rate limiting can cut server load by up to 40% during peak times while maintaining availability. Modern API gateways adjust limits based on real-time conditions rather than static thresholds.

Your monitoring strategy should track key indicators that predict rate limiting problems before they occur. Monitor request volume for traffic surges, error rates exceeding 5%, response times crossing 500ms, using adaptive algorithms like Token Bucket and Sliding Window for real-time adjustments.

Implement comprehensive monitoring tools that track:

- Request patterns and velocity changes

- Response time percentiles across different endpoints

- Error rate trends and 429 response frequency

- Queue depths and processing delays

Set up automated responses that gradually reduce request rates when approaching thresholds rather than waiting for hard limits. Ensure rate limit changes are applied consistently across distributed systems, with synchronized caches and automated recovery processes.

Alert Thresholds and Escalation

Configure alerts based on leading indicators rather than reactive metrics. When your 95th percentile response time climbs above 400ms, that's your early warning system. When 429 responses exceed 1% of total requests, you're already in trouble.

Dashboard design should prioritize actionable metrics over vanity numbers. Track request success rates, average response times, and error distribution patterns. Build escalation workflows that automatically adjust request rates and notify relevant teams when manual intervention becomes necessary.

Case Study: Multi-Carrier Rate Limit Orchestration

During a recent stress test across DHL, UPS, and FedEx APIs simultaneously, we discovered that each carrier's rate limiting behaved differently under sustained load. While Artillery uses more declarative configuration and k6 uses imperative scripting, k6 generally proves faster and more lightweight for high-traffic simulation.

The test revealed that DHL's sliding window approach allowed burst capacity recovery within minutes, while UPS's fixed window required waiting full reset periods. FedEx showed the most aggressive throttling but provided clearer rate limit headers for prediction.

Multi-carrier platforms handle this complexity differently. EasyPost abstracts rate limiting behind their own quotas, ShipEngine provides carrier-specific insights, nShift offers enterprise-grade rate limit management, and Cargoson implements sophisticated load balancing across carriers to minimize rate limiting exposure.

The key insight: your integration logic must adapt to each carrier's specific rate limiting personality while maintaining consistent behavior for your upstream applications. Test early, test frequently, and measure everything. Your production environment will thank you when Black Friday traffic hits.